OpenAI Unveils Sora 2: A New Era for Video Synthesis AI

On Tuesday, OpenAI introduced Sora 2, its latest video-synthesis AI model. This second-generation model generates videos in various styles and adds synchronized dialogue and sound effects. The launch was accompanied by a new iOS social app that enables users to insert themselves into AI-generated videos, a feature referred to as "cameos."

Showcasing New Capabilities

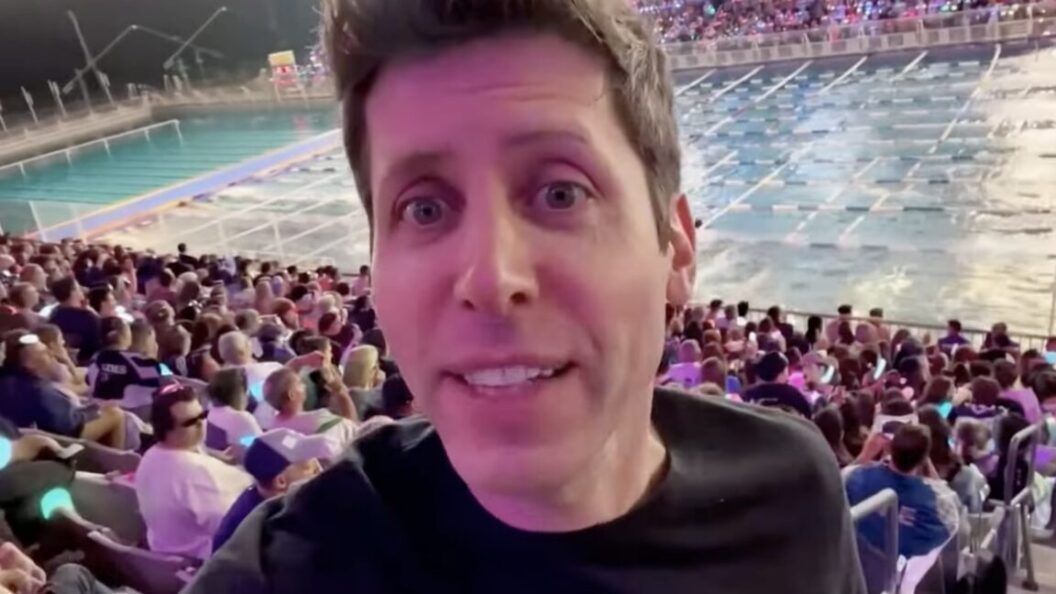

In a demonstration, OpenAI presented a video featuring a photorealistic Sam Altman, the CEO of OpenAI, engaging with viewers amidst imaginative scenes like a ride-on duck race and a glowing mushroom garden. The presentation highlighted Sora 2’s ability to produce "sophisticated background soundscapes, speech, and sound effects with a high degree of realism."

Synchronized audio and video is a notable trend in AI development: earlier this year, Google’s Veo 3 pioneered synchronized audio in a major video-synthesis model, and just days before the Sora 2 launch, Alibaba unveiled its own model, Wan 2.5, which incorporates similar advancements. With Sora 2, OpenAI now joins this competitive space.

Enhanced Visual Consistency and Complexity

Sora 2 improves on its predecessor in visual consistency and in its ability to follow complex instructions across multiple shots while maintaining coherence. OpenAI describes the upgrade as a transformative moment for video, analogous to its breakthrough with text-generation models like ChatGPT.

The updated model reportedly delivers increased physical accuracy, particularly in depicting complex movements such as Olympic gymnastics routines and triple axels, while adhering to realistic physics. This addresses some shortcomings noted in earlier iterations, where objects could behave in unrealistic ways.

Addressing Previous Limitations

OpenAI acknowledges that prior video models frequently fell short in physically accurate depictions. In its announcement, the company described prior video models as "overoptimistic," noting that "they will morph objects and deform reality to successfully execute upon a text prompt." For instance, in previous models, a basketball might spontaneously teleport to the hoop if the player missed a shot. Sora 2 aims to correct such inconsistencies: when a player misses, the ball should rebound off the backboard.

Implications and Future Prospects

The launch of Sora 2 signifies a leap in the capabilities of video synthesis technology, and it also raises questions about how such realistic output will be used. As AI-generated content becomes increasingly realistic, concerns regarding misinformation and the ethical use of such technologies may grow. The ability to seamlessly incorporate oneself into video narratives could redefine personal expression and creativity online while also inviting scrutiny over authenticity.

As OpenAI continues to develop these innovative tools, the industry will keenly watch for their impact on media production, user engagement, and the broader cultural landscape surrounding digital content creation. The integration of synchronized dialogue and realistic soundscapes in Sora 2 represents a pivotal step forward in harnessing AI for creative endeavors, blending technology with artistry in unprecedented ways.

In summary, Sora 2 stands as a testament to OpenAI’s rapid advancement in AI video synthesis, opening new avenues for creativity while also emphasizing the need for responsible implementation as these technologies evolve.